Marc Duiker

Oct 17 2023

Dapr v1.12 Release Highlights

The Dapr maintainers released a new version of Dapr, the distributed application runtime, last week. Dapr provides APIs for communication, state, and workflow, to build secure and reliable microservices. This post highlights the major new features and changes for the APIs and components in release v1.12.

APIs

Dapr provides an integrated set of APIs for building microservices quickly and reliably. The new APIs, and major upgrades to existing APIs, are described in this section.

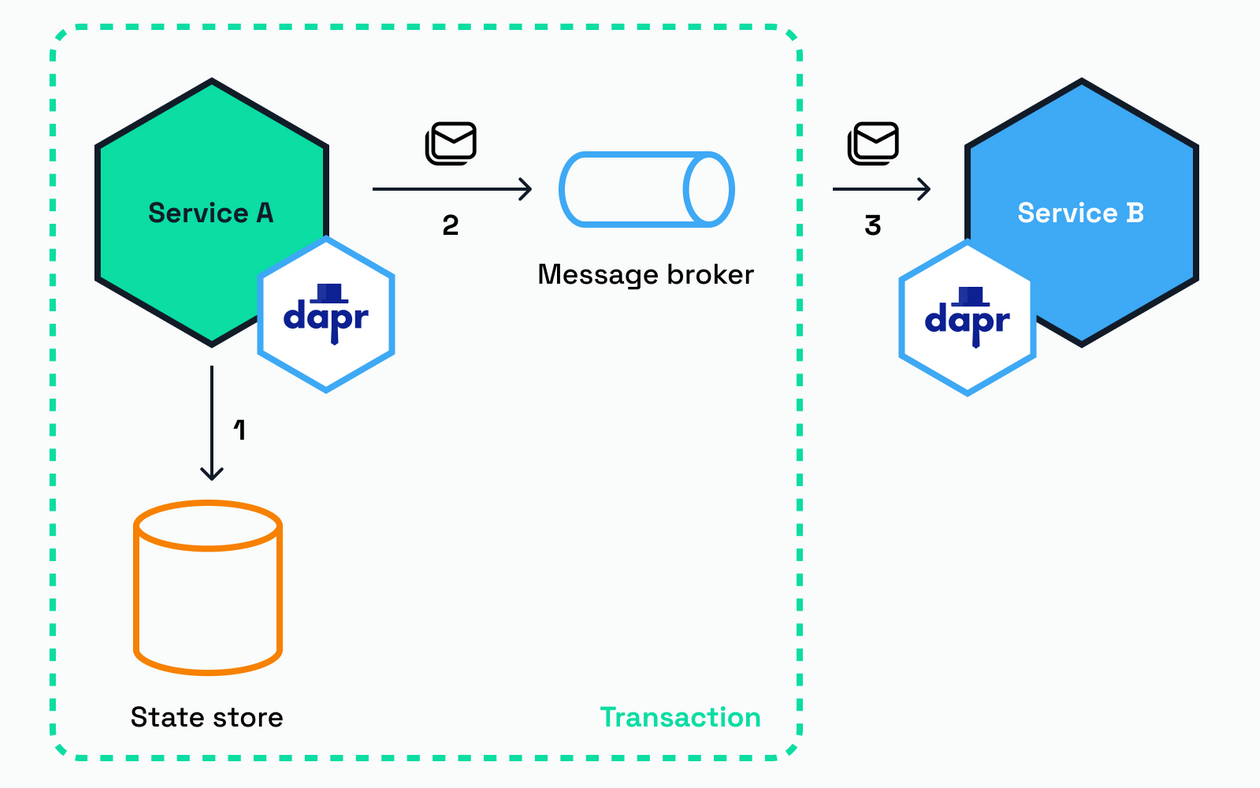

Outbox pattern

The transactional outbox pattern has been added to the transactional state management API. This pattern allows committing a single transaction across a state store and a pub/sub message broker, and is ideal for sending notifications when changes occur in application state. The outbox pattern is compatible with all transactional Dapr state stores, and all pub/sub brokers that Dapr can use.

A typical use case for the outbox pattern is:

- Adding a new user account to a state store.

- Publishing a message that the account has been successfully added.

To configure the outbox pattern, the following fields need to be specified in the state management component file:

- outboxPublishPubsub; this is the name of the pub/sub component.

- outboxPublishTopic; this is the name of the pub/sub topic.

MySQL state store example with outbox pattern specification

    apiVersion: dapr.io/v1alpha1
    kind: Component
    metadata:
      name: mysql-outbox
    spec:
      type: state.mysql
      version: v1
      metadata:
      - name: connectionString
        value: "<CONNECTION STRING>"
      - name: outboxPublishPubsub # Required
        value: "mypubsub"
      - name: outboxPublishTopic # Required
        value: "newOrder"

To use the outbox pattern in your application, use the state management transaction API:

POST/PUT http://localhost:<daprPort>/v1.0/state/<storename>/transaction
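As a rough sketch of what such a call could look like from Python, the helper below builds (but does not send) a transaction request that upserts a single key, using only the standard library. The store name `mysql-outbox` is taken from the component example; the port `3500`, the key and value, and the helper name `build_transaction_request` are assumptions made for illustration.

```python
import json
import urllib.request


def build_transaction_request(dapr_port, store_name, key, value):
    """Build a POST request for the state transaction endpoint (hypothetical helper)."""
    body = {
        "operations": [
            # A single upsert. With the outbox fields set on the state store
            # component, the notification is published as part of the same transaction.
            {"operation": "upsert", "request": {"key": key, "value": value}},
        ]
    }
    return urllib.request.Request(
        url=f"http://localhost:{dapr_port}/v1.0/state/{store_name}/transaction",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Port, key, and value are assumed example values.
outbox_request = build_transaction_request(3500, "mysql-outbox", "order1", {"item": "book"})
print(outbox_request.get_method(), outbox_request.full_url)

# Sending the request requires a running Dapr sidecar:
# urllib.request.urlopen(outbox_request)
```

The application only talks to the state transaction endpoint; publishing to the `newOrder` topic is driven by the component configuration rather than by application code.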

More information on outbox configuration, supported state stores, and usage of the transactional outbox pattern can be found in the docs.

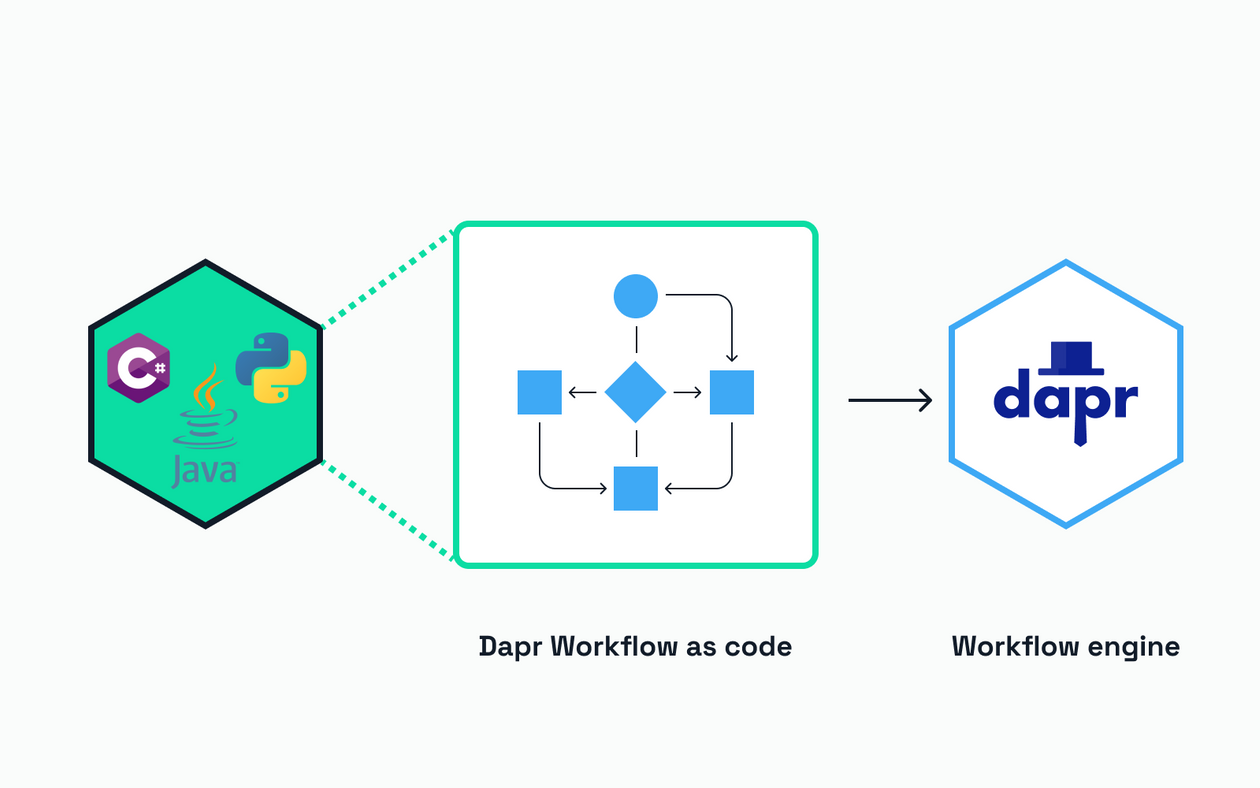

Workflow now in beta

Dapr workflow enables developers to orchestrate business logic for messaging and state management across various microservices. The Dapr Workflow API has progressed to beta maturity level with release 1.12. This means that:

- It’s not production ready yet.

- Recommended for non-business-critical use.

- Development will continue until stable maturity level is reached.

- The API contract is close to finalization.

- Supports several Dapr language SDKs, in this case, .NET, Python, and Java.

- Performance should be production-ready, but may not be achieved in all cases.

Authoring Workflows in Java

Workflow authoring was already supported for .NET and Python. Now, Java can be used to author workflows as well. This requires a dependency on the io.dapr.workflow package that contains the necessary Workflow type definitions.

See the Dapr java-sdk GitHub repository for a full workflow demo.

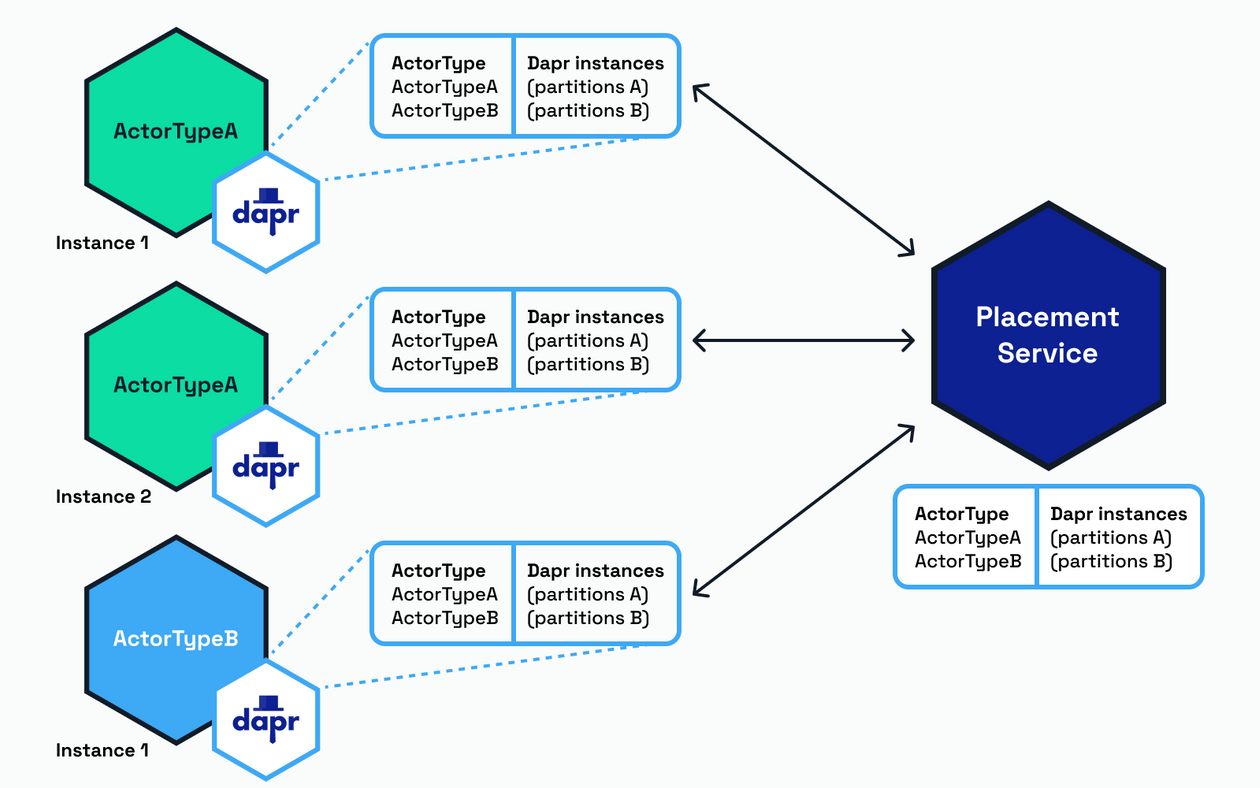

Improvements to Dapr Actors and Placement APIs

Many improvements have been made to the Dapr Actors API, including bug fixes and performance improvements.

- The Placement service has a new placement API that allows examination of the placement tables to find out which types of actors are active.

GET http://localhost:<healthzPort>/placement/state

The response contains an array of actor host info:

    {
      "hostList": [
        {
          "name": "198.18.0.1:49347",
          "appId": "actor1",
          "actorTypes": ["testActorType1", "testActorType3"],
          "updatedAt": 1690274322325260000
        },
        {
          "name": "198.18.0.2:49347",
          "appId": "actor2",
          "actorTypes": ["testActorType2"],
          "updatedAt": 1690274322325260000
        },
        {
          "name": "198.18.0.3:49347",
          "appId": "actor2",
          "actorTypes": ["testActorType2"],
          "updatedAt": 1690274322325260000
        }
      ],
      "tableVersion": 1
    }

For more information, read the Placement API reference in the docs.

- API calls to Actor and Workflow endpoints now block while the Dapr Actor runtime is being initialized, so applications no longer need to implement this readiness check themselves.

- Performance improvements have been made to Actor Reminders to prevent multiple re-evaluations when multiple sidecars are online/offline at the same time.
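To illustrate how the placement state response shown earlier in this section might be consumed, here is a minimal Python sketch that indexes hosts by actor type. The sample data is copied from that response; the function name `hosts_by_actor_type` is a hypothetical helper, not part of any Dapr SDK.

```python
import json
from collections import defaultdict

# Sample response copied from the placement state example in this post.
PLACEMENT_STATE = json.loads("""
{
  "hostList": [
    {"name": "198.18.0.1:49347", "appId": "actor1",
     "actorTypes": ["testActorType1", "testActorType3"],
     "updatedAt": 1690274322325260000},
    {"name": "198.18.0.2:49347", "appId": "actor2",
     "actorTypes": ["testActorType2"],
     "updatedAt": 1690274322325260000},
    {"name": "198.18.0.3:49347", "appId": "actor2",
     "actorTypes": ["testActorType2"],
     "updatedAt": 1690274322325260000}
  ],
  "tableVersion": 1
}
""")


def hosts_by_actor_type(state):
    """Map each actor type to the sorted list of host addresses that serve it."""
    index = defaultdict(list)
    for host in state["hostList"]:
        for actor_type in host["actorTypes"]:
            index[actor_type].append(host["name"])
    return {actor_type: sorted(names) for actor_type, names in index.items()}


actor_index = hosts_by_actor_type(PLACEMENT_STATE)
print(actor_index["testActorType2"])
# ['198.18.0.2:49347', '198.18.0.3:49347']
```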

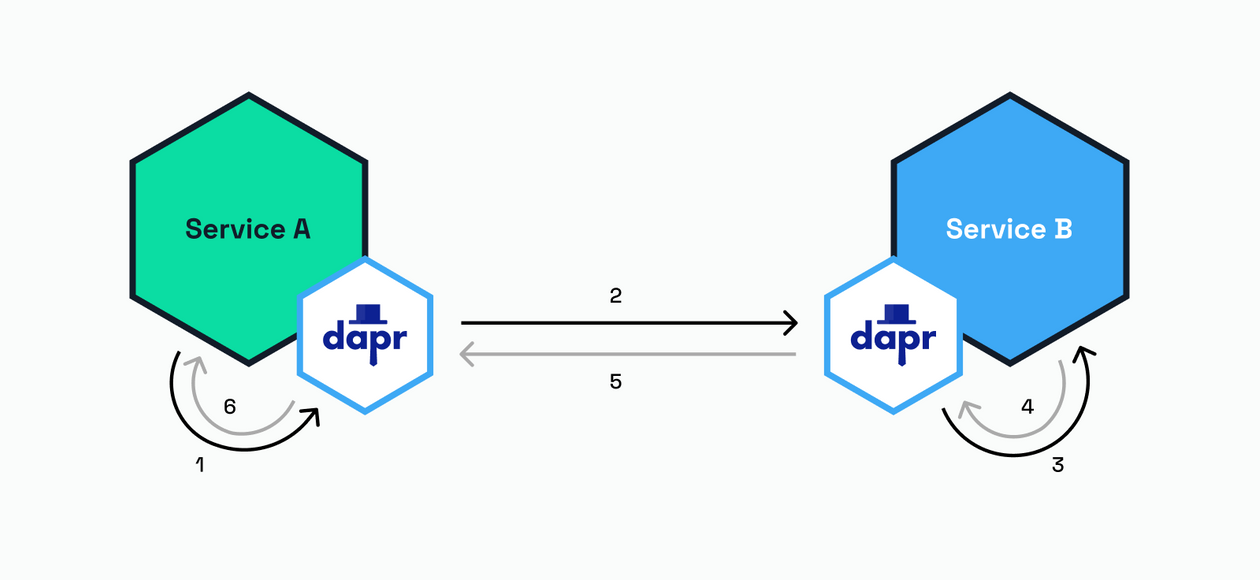

Service Invocation: HTTP streaming

The HTTP service invocation API now uses streaming by default, which enhances the overall performance of HTTP service invocation, especially when using large request or response bodies.

Users who use HTTP service invocation will experience improvements such as reduced memory usage, much smaller time-to-first-byte (TTFB), and the ability to send messages in chunks.
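As a rough illustration of sending a request body in chunks, the Python sketch below streams a file-like object through the service invocation endpoint using only the standard library. The function names, the chunk size, and the idea of posting a binary stream are assumptions for this example; `invoke_streaming` needs a running sidecar and is therefore not called here.

```python
import http.client
import io


def iter_chunks(stream, chunk_size=64 * 1024):
    """Yield successive byte chunks from a binary file-like object."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        yield chunk


def invoke_streaming(dapr_port, app_id, method_name, stream):
    """POST a stream to another app via the sidecar (hypothetical helper)."""
    conn = http.client.HTTPConnection("localhost", dapr_port)
    try:
        # Passing an iterable body without a Content-Length header makes
        # http.client send it with chunked transfer encoding.
        conn.request(
            "POST",
            f"/v1.0/invoke/{app_id}/method/{method_name}",
            body=iter_chunks(stream),
        )
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()


# Local demonstration of the chunking only; no network call is made.
demo_chunks = list(iter_chunks(io.BytesIO(b"a" * 10), chunk_size=4))
print([len(chunk) for chunk in demo_chunks])
# [4, 4, 2]
```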

More information about service invocation with HTTP streaming can be found in the docs.

Components

Dapr decouples the functionality of the integrated set of APIs from their underlying implementations via components. Components of the same type are interchangeable since they implement the same interface. Release 1.12 contains both new components and improvements to existing components.

New components

The Azure OpenAI binding has been added to this release. This output binding allows communication with the Azure OpenAI service. It supports three operations:

- completion; this generates responses on a prompt.

- chat-completion; this generates responses for a chat message.

- get-embedding; this returns a vector representation of the input that can be easily used by machine learning models.

The minimum component file configuration is as follows:

    apiVersion: dapr.io/v1alpha1
    kind: Component
    metadata:
      name: <NAME>
    spec:
      type: bindings.azure.openai
      version: v1
      metadata:
      - name: apiKey # Required
        value: "1234567890abcdef"
      - name: endpoint # Required
        value: "https://myopenai.openai.azure.com"

To use this binding, make a POST request to the /bindings/<binding-name> endpoint:

POST http://localhost:<dapr-port>/v1.0/bindings/<binding-name>

and provide a JSON payload:

{"operation": "completion","data": {"deploymentId": "my-model","prompt": "Dapr is","maxTokens":5}}
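For illustration, the same call could be prepared from Python roughly as follows. This sketch only builds the request and does not send it. The binding name `my-openai`, the port `3500`, and the helper name `build_binding_request` are assumptions; the payload is the one shown above.

```python
import json
import urllib.request


def build_binding_request(dapr_port, binding_name, operation, data):
    """Build a POST request that invokes an output binding (hypothetical helper)."""
    body = {"operation": operation, "data": data}
    return urllib.request.Request(
        url=f"http://localhost:{dapr_port}/v1.0/bindings/{binding_name}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Binding name and port are assumed example values.
openai_request = build_binding_request(
    3500,
    "my-openai",
    "completion",
    {"deploymentId": "my-model", "prompt": "Dapr is", "maxTokens": 5},
)
print(openai_request.get_method(), openai_request.full_url)

# Sending the request requires a running Dapr sidecar with the binding loaded:
# urllib.request.urlopen(openai_request)
```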

For more information about this binding, please visit the docs.

Secret stores now supported as pluggable component

Secret stores have been added to the pluggable component model. This means you can now write custom secret store components and use these in your Dapr applications. Other pluggable component types are state stores, pub/sub and bindings. You can write pluggable components in Go or .NET.

Component maturity

The following components have been promoted to stable status:

- Pub/sub: Azure Service Bus Queues

- Binding: Zeebe command

- Binding: Zeebe jobworker

- In-memory pub/sub and In-memory state store components

Component improvements

State stores

- All transactional state stores now include TTL information when retrieving metadata. Metadata can be retrieved by making a GET request to the metadata endpoint:

GET http://localhost:3500/v1.0/metadata

- The etcd state store component is now at version 2. See the docs for versioning details.

Pub/sub

RabbitMQ can now be configured to create quorum queues, which are designed to be safer and simpler than the classic queues. See the RabbitMQ docs for more information.

In addition, the queue name can be set via subscription metadata.

Bindings

PostgreSQL and MySQL now support parameterized queries to prevent SQL injection attacks.
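The sketch below shows the general idea in Python: the SQL text contains only placeholders, and user-supplied values travel separately. It assumes the binding's `exec` operation accepts `sql` and `params` metadata keys, with `params` being a JSON-encoded array; check the binding docs for the exact field names. The `$1`-style placeholders are PostgreSQL syntax, and the table `foo` and the helper name are hypothetical.

```python
import json


def build_exec_request_body(sql, params):
    """Build a binding request body with the query and its parameters kept apart."""
    return {
        "operation": "exec",
        "metadata": {
            "sql": sql,
            # Assumed format: the parameters as a JSON-encoded array string.
            "params": json.dumps(params),
        },
    }


# A malicious-looking value stays plain data and never becomes part of the SQL text.
user_input = "demo'); DROP TABLE foo; --"
exec_body = build_exec_request_body(
    "INSERT INTO foo (id, c1) VALUES ($1, $2)",
    [1, user_input],
)
print(json.dumps(exec_body, indent=2))
```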

PostgreSQL components

All PostgreSQL components (binding, configuration store, state store) now support authentication with Azure AD.

Name resolvers

Name resolution is used in service-to-service invocation when the name of a Dapr application needs to be resolved to the location of the corresponding Dapr sidecar. You can now override the default Kubernetes DNS name resolution by adding a Dapr configuration CRD that contains a nameResolution spec:

    apiVersion: dapr.io/v1alpha1
    kind: Configuration
    metadata:
      name: appconfig
    spec:
      nameResolution:
        component: "kubernetes"
        configuration:
          clusterDomain: "cluster.local" # Mutually exclusive with the template field
          template: "{{.ID}}-{{.Data.region}}.internal:{{.Port}}" # Mutually exclusive with the clusterDomain field

If you're using any of these components and have a demo to show, drop a message in the #show-and-tell channel on the Dapr Discord.

CLI & Multi App Run

Improvement to Kubernetes developer experience

A new CLI option has been added to make it easier to set up a dev environment on Kubernetes. Running dapr init -k --dev deploys Redis and Zipkin containers to Kubernetes, identical to the (local) self-hosted mode. For more information on dapr init, please see the docs.

Specify multiple resource paths

Multiple resource paths can now be used when starting apps with dapr run to allow loading of multiple component/resource files across several locations.

dapr run --app-id myapp --resources-path path1 --resources-path path2

See the Dapr docs for all the flags available for dapr run.

Windows support for Multi-app Run

Multi-app run allows running multiple Dapr applications in (local) self-hosted mode, with just one CLI command. This feature was introduced in 1.10 with macOS and Linux support. Now with version 1.12, multi-app run can be used on Windows as well.

Multi-app run for Kubernetes

Multi-app run has been extended with a preview feature that allows launching multiple apps with container images on Kubernetes.

You can use this feature by running:

dapr run -k -f .

The command loads a dapr.yaml file that specifies the applications, with their container image resources. Here’s an example that contains a Node service and a Python app:

    version: 1
    common:
    apps:
      - appID: nodeapp
        appDirPath: ./nodeapp/
        appPort: 3000
        containerImage: ghcr.io/dapr/samples/hello-k8s-node:latest
        createService: true
        env:
          APP_PORT: 3000
      - appID: pythonapp
        appDirPath: ./pythonapp/
        containerImage: ghcr.io/dapr/samples/hello-k8s-python:latest

See the docs for all the configuration options.

What is next?

This post is not a complete list of features and changes released in version 1.12. Read the official Dapr release notes for more information. The release notes also contain information on how to upgrade to this latest version.

Excited about these features and want to learn more? I'll cover the new features in more detail in future posts. Until then, join the Dapr Discord to connect with thousands of Dapr users.